If Docker is running in Windows containers mode and you try to pull a Linux-based image such as nginx with docker pull nginx:latest, the pull fails with a message like the following: latest: Pulling from library/nginx no matching manifest for windows/amd64 10.0.18363 in the manifest list entries. To fix this, switch to Linux containers.

Welcome to Docker Desktop! The Docker Desktop for Windows user manual provides information on how to configure and manage your Docker Desktop settings.

For information about Docker Desktop download, system requirements, and installation instructions, see Install Docker Desktop.

Settings

The Docker Desktop menu allows you to configure your Docker settings such as installation, updates, version channels, Docker Hub login, and more.

This section explains the configuration options accessible from the Settings dialog.

Open the Docker Desktop menu by clicking the Docker icon in the Notifications area (or System tray):

Select Settings to open the Settings dialog:

General

On the General tab of the Settings dialog, you can configure when to start and update Docker.

Start Docker when you log in - Automatically start Docker Desktop upon Windows system login.

Expose daemon on tcp://localhost:2375 without TLS - Click this option to enable legacy clients to connect to the Docker daemon. You must use this option with caution as exposing the daemon without TLS can result in remote code execution attacks.

Send usage statistics - By default, Docker Desktop sends diagnostics, crash reports, and usage data. This information helps Docker improve and troubleshoot the application. Clear the check box to opt out. Docker may periodically prompt you for more information.

Resources

The Resources tab allows you to configure CPU, memory, disk, proxies, network, and other resources. Different settings are available for configuration depending on whether you are using Linux containers in WSL 2 mode, Linux containers in Hyper-V mode, or Windows containers.

Advanced

Note

The Advanced tab is only available in Hyper-V mode, because in WSL 2 mode and Windows container mode these resources are managed by Windows. In WSL 2 mode, you can configure limits on the memory, CPU, and swap size allocated to the WSL 2 utility VM.

Use the Advanced tab to limit resources available to Docker.

CPUs: By default, Docker Desktop is set to use half the number of processors available on the host machine. To increase processing power, set this to a higher number; to decrease, lower the number.

Memory: By default, Docker Desktop is set to use 2 GB runtime memory, allocated from the total available memory on your machine. To increase the RAM, set this to a higher number. To decrease it, lower the number.

Swap: Configure swap file size as needed. The default is 1 GB.

Disk image size: Specify the size of the disk image.

Disk image location: Specify the location of the Linux volume where containers and images are stored.

You can also move the disk image to a different location. If you attempt to move a disk image to a location that already has one, you get a prompt asking if you want to use the existing image or replace it.

File sharing

Note

The File sharing tab is only available in Hyper-V mode, because in WSL 2 mode and Windows container mode all files are automatically shared by Windows.

Use File sharing to allow local directories on Windows to be shared with Linux containers. This is especially useful for editing source code in an IDE on the host while running and testing the code in a container. Note that configuring file sharing is not necessary for Windows containers, only Linux containers. If a directory is not shared with a Linux container you may get file not found or cannot start service errors at runtime. See Volume mounting requires shared folders for Linux containers.

File share settings are:

Add a Directory: Click + and navigate to the directory you want to add.

Apply & Restart makes the directory available to containers using Docker’s bind mount (-v) feature.

Tips on shared folders, permissions, and volume mounts

Share only the directories that you need with the container. File sharing introduces overhead as any changes to the files on the host need to be notified to the Linux VM. Sharing too many files can lead to high CPU load and slow filesystem performance.

Shared folders are designed to allow application code to be edited on the host while being executed in containers. For non-code items such as cache directories or databases, the performance will be much better if they are stored in the Linux VM, using a data volume (named volume) or data container.

Docker Desktop sets permissions to read/write/execute for users, groups, and others (0777 or a+rwx). This is not configurable. See Permissions errors on data directories for shared volumes.

Windows presents a case-insensitive view of the filesystem to applications while Linux is case-sensitive. On Linux it is possible to create two separate files, test and Test, while on Windows these filenames would actually refer to the same underlying file. This can lead to problems where an app works correctly on a developer Windows machine (where the file contents are shared) but fails when run in Linux in production (where the file contents are distinct). To avoid this, Docker Desktop insists that all shared files are accessed as their original case. Therefore if a file is created called test, it must be opened as test. Attempts to open Test will fail with “No such file or directory”. Similarly once a file called test is created, attempts to create a second file called Test will fail.
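One way to catch this class of problem before it reaches production is to scan a list of project paths for names that differ only by case. The following is a minimal sketch, not part of Docker Desktop; the function name find_case_collisions and the sample paths are hypothetical.

```python
from collections import defaultdict


def find_case_collisions(paths):
    """Return groups of paths that differ only by letter case."""
    groups = defaultdict(list)
    for path in paths:
        # casefold() gives a case-insensitive key for comparison.
        groups[path.casefold()].append(path)
    # Keep only groups that contain more than one distinct spelling.
    return sorted(
        sorted(set(group)) for group in groups.values() if len(set(group)) > 1
    )


# Hypothetical file list: "test" and "Test" collide on a case-insensitive view.
print(find_case_collisions(["test", "Test", "src/app.py", "README.md"]))
```

Any group reported by such a check names files that would be distinct on Linux but map to one file on Windows.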

Shared folders on demand

You can share a folder “on demand” the first time a particular folder is used by a container.

If you run a Docker command from a shell with a volume mount (as shown in the example below) or kick off a Compose file that includes volume mounts, you get a popup asking if you want to share the specified folder.

You can select to Share it, in which case it is added to your Docker Desktop Shared Folders list and available to containers. Alternatively, you can opt not to share it by selecting Cancel.

Proxies

Docker Desktop lets you configure HTTP/HTTPS Proxy Settings and automatically propagates these to Docker. For example, if you set your proxy settings to http://proxy.example.com, Docker uses this proxy when pulling containers.

Your proxy settings, however, will not be propagated into the containers you start. If you wish to set the proxy settings for your containers, you need to define environment variables for them, just like you would do on Linux, for example with the -e option of docker run.

For more information on setting environment variables for running containers, see Set environment variables.

Network

Note

The Network tab is not available in Windows container mode because networking is managed by Windows.

You can configure Docker Desktop networking to work on a virtual private network (VPN). Specify a network address translation (NAT) prefix and subnet mask to enable Internet connectivity.

DNS Server: You can configure the DNS server to use dynamic or static IP addressing.

Note

Some users reported problems connecting to Docker Hub on Docker Desktop. This would manifest as an error when trying to run docker commands that pull images from Docker Hub that are not already downloaded, such as a first time run of docker run hello-world. If you encounter this, reset the DNS server to use the Google DNS fixed address: 8.8.8.8. For more information, see Networking issues in Troubleshooting.

Updating these settings requires a reconfiguration and reboot of the Linux VM.

WSL Integration

In WSL 2 mode, you can configure which WSL 2 distributions will have the Docker WSL integration.

By default, the integration will be enabled on your default WSL distribution. To change your default WSL distro, run wsl --set-default <distro name>. (For example, to set Ubuntu as your default WSL distro, run wsl --set-default ubuntu).

You can also select any additional distributions you would like to enable the WSL 2 integration on.

For more details on configuring Docker Desktop to use WSL 2, see Docker Desktop WSL 2 backend.

Docker Engine

The Docker Engine page allows you to configure the Docker daemon to determine how your containers run.

Type a JSON configuration file in the box to configure the daemon settings. For a full list of options, see the Docker Engine dockerd command-line reference.

Click Apply & Restart to save your settings and restart Docker Desktop.

Command Line

On the Command Line page, you can specify whether or not to enable experimental features.

You can toggle the experimental features on and off in Docker Desktop. If you toggle the experimental features off, Docker Desktop uses the current generally available release of Docker Engine.

Experimental features

Experimental features provide early access to future product functionality. These features are intended for testing and feedback only as they may change between releases without warning or can be removed entirely from a future release. Experimental features must not be used in production environments. Docker does not offer support for experimental features.

For a list of current experimental features in the Docker CLI, see Docker CLI Experimental features.

Run docker version to verify whether you have enabled experimental features. Experimental mode is listed under Server data. If Experimental is true, then Docker is running in experimental mode, as shown here:

Kubernetes

Note

The Kubernetes tab is not available in Windows container mode.

Docker Desktop includes a standalone Kubernetes server that runs on your Windows machine, so that you can test deploying your Docker workloads on Kubernetes. To enable Kubernetes support and install a standalone instance of Kubernetes running as a Docker container, select Enable Kubernetes.

For more information about using the Kubernetes integration with Docker Desktop, see Deploy on Kubernetes.

Reset

The Restart Docker Desktop and Reset to factory defaults options are now available on the Troubleshoot menu. For information, see Logs and Troubleshooting.

Troubleshoot

Visit our Logs and Troubleshooting guide for more details.

Log on to our Docker Desktop for Windows forum to get help from the community, review current user topics, or join a discussion.

Log on to Docker Desktop for Windows issues on GitHub to report bugs or problems and review community reported issues.

For information about providing feedback on the documentation or updating it yourself, see Contribute to documentation.

Switch between Windows and Linux containers

From the Docker Desktop menu, you can toggle which daemon (Linux or Windows) the Docker CLI talks to. Select Switch to Windows containers to use Windows containers, or select Switch to Linux containers to use Linux containers (the default).

For more information on Windows containers, refer to the following documentation:

Microsoft documentation on Windows containers.

Build and Run Your First Windows Server Container (Blog Post) gives a quick tour of how to build and run native Docker Windows containers on Windows 10 and Windows Server 2016 evaluation releases.

Getting Started with Windows Containers (Lab) shows you how to use the MusicStore application with Windows containers. The MusicStore is a standard .NET application and, forked here to use containers, is a good example of a multi-container application.

To understand how to connect to Windows containers from the local host, see Limitations of Windows containers for localhost and published ports.

Settings dialog changes with Windows containers

When you switch to Windows containers, the Settings dialog only shows those tabs that are active and apply to your Windows containers:

If you set proxies or daemon configuration in Windows containers mode, these apply only on Windows containers. If you switch back to Linux containers, proxies and daemon configurations return to what you had set for Linux containers. Your Windows container settings are retained and become available again when you switch back.

Dashboard

The Docker Desktop Dashboard enables you to interact with containers and applications and manage the lifecycle of your applications directly from your machine. The Dashboard UI shows all running, stopped, and started containers with their state. It provides an intuitive interface to perform common actions to inspect and manage containers and Docker Compose applications. For more information, see Docker Desktop Dashboard.

Docker Hub

Select Sign in / Create Docker ID from the Docker Desktop menu to access your Docker Hub account. Once logged in, you can access your Docker Hub repositories directly from the Docker Desktop menu.

For more information, refer to the following Docker Hub topics:

Two-factor authentication

Docker Desktop enables you to sign into Docker Hub using two-factor authentication. Two-factor authentication provides an extra layer of security when accessing your Docker Hub account.

You must enable two-factor authentication in Docker Hub before signing into your Docker Hub account through Docker Desktop. For instructions, see Enable two-factor authentication for Docker Hub.

After you have enabled two-factor authentication:

Go to the Docker Desktop menu and then select Sign in / Create Docker ID.

Enter your Docker ID and password and click Sign in.

After you have successfully signed in, Docker Desktop prompts you to enter the authentication code. Enter the six-digit code from your phone and then click Verify.

After you have successfully authenticated, you can access your organizations and repositories directly from the Docker Desktop menu.

Adding TLS certificates

You can add trusted Certificate Authorities (CAs) to your Docker daemon to verify registry server certificates, and you can add client certificates to authenticate to registries.

How do I add custom CA certificates?

Docker Desktop supports all trusted Certificate Authorities (CAs) (root or intermediate). Docker recognizes certs stored under Trusted Root Certification Authorities or Intermediate Certification Authorities.

Docker Desktop creates a certificate bundle of all user-trusted CAs based on the Windows certificate store, and appends it to Moby trusted certificates. Therefore, if an enterprise SSL certificate is trusted by the user on the host, it is trusted by Docker Desktop.

To learn more about how to install a CA root certificate for the registry, see Verify repository client with certificates in the Docker Engine topics.

How do I add client certificates?

You can add your client certificates in ~/.docker/certs.d/<MyRegistry>:<Port>/client.cert and ~/.docker/certs.d/<MyRegistry>:<Port>/client.key. You do not need to push your certificates with git commands.

When the Docker Desktop application starts, it copies the ~/.docker/certs.d folder on your Windows system to the /etc/docker/certs.d directory on Moby (the Docker Desktop virtual machine running on Hyper-V).

You need to restart Docker Desktop after making any changes to the keychain or to the ~/.docker/certs.d directory in order for the changes to take effect.

The registry cannot be listed as an insecure registry (see Docker Daemon). Docker Desktop ignores certificates listed under insecure registries, and does not send client certificates. Commands like docker run that attempt to pull from the registry produce error messages on the command line, as well as on the registry.

To learn more about how to set the client TLS certificate for verification, see Verify repository client with certificates in the Docker Engine topics.

Where to go next

Try out the walkthrough at Get Started.

Dig in deeper with Docker Labs example walkthroughs and source code.

Refer to the Docker CLI Reference Guide.

- Using Windows containers

- Networking

- Build directory in service

- The builds and cache storage

- The persistent storage

- The privileged mode

- How pull policies work

GitLab Runner can use Docker to run jobs on user provided images. This is possible with the use of Docker executor.

The Docker executor, when used with GitLab CI, connects to Docker Engine and runs each build in a separate and isolated container using the predefined image that is set up in .gitlab-ci.yml and in accordance with config.toml.

That way you can have a simple and reproducible build environment that can also run on your workstation. The added benefit is that you can test all the commands that we will explore later from your shell, rather than having to test them on a dedicated CI server.

The following configurations are supported:

| Runner is installed on: | Executor is: | Container is running: |
|---|---|---|
| Windows | docker-windows | Windows |
| Windows | docker | Linux |
| Linux | docker | Linux |

The following configurations are not supported:

| Runner is installed on: | Executor is: | Container is running: |
|---|---|---|
| Linux | docker-windows | Linux |
| Linux | docker | Windows |
| Linux | docker-windows | Windows |
| Windows | docker | Windows |
| Windows | docker-windows | Linux |

1.13.0, on Windows Server it needs to be more recent to identify the Windows Server version.

Using Windows containers

To use Windows containers with the Docker executor, note the following information about limitations, supported Windows versions, and configuring a Windows Docker executor.

Nanoserver support

Introduced in GitLab Runner 13.6.

With the support for PowerShell Core introduced in the Windows helper image, it is now possible to leverage the nanoserver variants for the helper image.

Limitations

The following are some limitations of using Windows containers with Docker executor:

- Docker-in-Docker is not supported, since it’s not supported by Docker itself.

- Interactive web terminals are not supported.

- Host device mounting is not supported.

- When mounting a volume directory it has to exist, or Docker will fail to start the container, see #3754 for additional detail.

- docker-windows executor can be run only using GitLab Runner running on Windows.

- Linux containers on Windows are not supported, since they are still experimental. Read the relevant issue for more details.

- Because of a limitation in Docker, if the destination path drive letter is not c:, paths are not supported for some directory settings. This means values such as f:\cache_dir are not supported, but f: is supported. However, if the destination path is on the c: drive, paths are also supported (for example c:\cache_dir).
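The drive-letter rule in the last limitation can be expressed as a small check. This is a sketch of the rule as worded above, not GitLab Runner code; the function name destination_supported is hypothetical, and paths without a drive letter are outside what the text covers.

```python
import ntpath


def destination_supported(path):
    """Apply the drive-letter rule described above to a Windows destination path."""
    # ntpath works with Windows-style paths on any operating system.
    drive, rest = ntpath.splitdrive(path)
    if drive.lower() == "c:":
        # On the c: drive, full paths are supported.
        return True
    # On other drives only the bare drive (no path component) is supported.
    return rest == ""


print(destination_supported("c:\\cache_dir"))
print(destination_supported("f:\\cache_dir"))
print(destination_supported("f:"))
```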

Supported Windows versions

GitLab Runner only supports the following versions of Windows, which follow our support lifecycle for Windows:

- Windows Server 2004.

- Windows Server 1909.

- Windows Server 1903.

- Windows Server 1809.

For future Windows Server versions, we have a future version support policy.

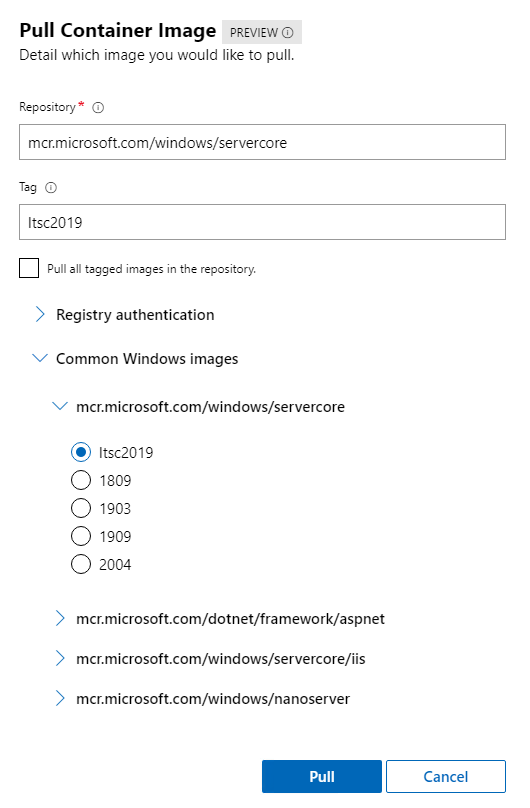

You can only run containers based on the same OS version that the Docker daemon is running on. For example, the following Windows Server Core images can be used:

- mcr.microsoft.com/windows/servercore:2004
- mcr.microsoft.com/windows/servercore:2004-amd64
- mcr.microsoft.com/windows/servercore:1909
- mcr.microsoft.com/windows/servercore:1909-amd64
- mcr.microsoft.com/windows/servercore:1903
- mcr.microsoft.com/windows/servercore:1903-amd64
- mcr.microsoft.com/windows/servercore:1809
- mcr.microsoft.com/windows/servercore:1809-amd64
- mcr.microsoft.com/windows/servercore:ltsc2019

Supported Docker versions

A Windows Server running GitLab Runner must be running a recent version of Docker because GitLab Runner uses Docker to detect what version of Windows Server is running.

A combination known not to work with GitLab Runner is Docker 17.06 and Server 1909. Docker does not identify the version of Windows Server, resulting in the following error:

This error should contain the Windows Server version. If you get this error, with no version specified, upgrade Docker. Try a Docker version of similar age, or later, than the Windows Server release.

Read more about troubleshooting this.

Configuring a Windows Docker executor

When c:\cache is used as a source directory with the --docker-volumes option or the DOCKER_VOLUMES environment variable, there is a known issue.

Below is an example of the configuration for a simple Docker executor running Windows.

For other configuration options for the Docker executor, see the advanced configuration section.


Services

You can use services by enabling network per-build networking mode. Available since GitLab Runner 12.9.

Workflow

The Docker executor divides the job into multiple steps:

- Prepare: Create and start the services.

- Pre-job: Clone, restore cache and download artifacts from previous stages. This is run on a special Docker image.

- Job: User build. This is run on the user-provided Docker image.

- Post-job: Create cache, upload artifacts to GitLab. This is run on a special Docker image.

The special Docker image is based on Alpine Linux and contains all the tools required to run the prepare, pre-job, and post-job steps, like the Git and the GitLab Runner binaries for supporting caching and artifacts. You can find the definition of this special image in the official GitLab Runner repository.

The image keyword

The image keyword is the name of the Docker image that is present in the local Docker Engine (list all images with docker images) or any image that can be found at Docker Hub. For more information about images and Docker Hub please read the Docker Fundamentals documentation.

In short, with image we refer to the Docker image, which will be used to create a container on which your build will run.

If you don’t specify the namespace, Docker implies library which includes all official images. That’s why you’ll often see the library part omitted in .gitlab-ci.yml and config.toml. For example you can define an image like image: ruby:2.6, which is a shortcut for image: library/ruby:2.6.

Then, for each Docker image there are tags, denoting the version of the image. These are defined with a colon (:) after the image name. For example, for Ruby you can see the supported tags at https://hub.docker.com/_/ruby/. If you don’t specify a tag (like image: ruby), latest is implied.
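The two defaults described above (an implied library namespace and an implied latest tag) can be illustrated with a short sketch. It assumes a Docker Hub image name without a registry host or port; the function name expand_image is hypothetical and this is not how Docker itself parses references.

```python
def expand_image(name):
    """Expand a short Docker Hub image name to namespace/name:tag.

    Assumes the name has no registry host (for example no "host:5000/" prefix).
    """
    repo, sep, tag = name.partition(":")
    if not sep:
        # No tag given, so "latest" is implied.
        tag = "latest"
    if "/" not in repo:
        # No namespace given, so the official "library" namespace is implied.
        repo = "library/" + repo
    return f"{repo}:{tag}"


print(expand_image("ruby:2.6"))
print(expand_image("ruby"))
```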

The image you choose to run your build in via the image directive must have a working shell in its operating system PATH. Supported shells are sh, bash, and pwsh (since 13.9) for Linux, and PowerShell for Windows. GitLab Runner cannot execute a command using the underlying OS system calls (such as exec).

The services keyword

The services keyword defines just another Docker image that is run during your build and is linked to the Docker image that the image keyword defines. This allows you to access the service image during build time.

The service image can run any application, but the most common use case is to run a database container, e.g., mysql. It’s easier and faster to use an existing image and run it as an additional container than install mysql every time the project is built.

You can see some widely used services examples in the relevant documentation of CI services examples.

If needed, you can assign an alias to each service.

Networking

Networking is required to connect services to the build job and may also be used to run build jobs in user-defined networks. Either legacy network_mode or per-build networking may be used.

Legacy container links

The default network mode uses Legacy container links with the default Docker bridge mode to link the job container with the services.

network_mode can be used to configure how the networking stack is set up for the containers using one of the following values:

- One of the standard Docker networking modes:
  - bridge: use the bridge network (default)
  - host: use the host’s network stack inside the container
  - none: no networking (not recommended)
- Any other network_mode value is taken as the name of an already existing Docker network, which the build container should connect to.

For name resolution to work, Docker will manipulate the /etc/hosts file in the build job container to include the service container hostname (and alias). However, the service container will not be able to resolve the build job container name. To achieve that, use the per-build network mode.

Linked containers share their environment variables.

Network per-build

Introduced in GitLab Runner 12.9.

This mode will create and use a new user-defined Docker bridge network per build. User-defined bridge networks are covered in detail in the Docker documentation.

Unlike legacy container links used in other network modes, Docker environment variables are not shared across the containers.

Docker networks may conflict with other networks on the host, including other Docker networks, if the CIDR ranges are already in use. The default Docker address pool can be configured via default-address-pool in dockerd.

To enable this mode you need to enable the FF_NETWORK_PER_BUILD feature flag.

When a job starts, a bridge network is created (similarly to docker network create <network>). Upon creation, the service container(s) and the build job container are connected to this network.

Both the build job container and the service container(s) will be able to resolve each other’s hostnames (and aliases). This functionality is provided by Docker.

The build container is resolvable via the build alias as well as its GitLab-assigned hostname.

The network is removed at the end of the build job.

Define image and services from .gitlab-ci.yml

You can simply define an image that will be used for all jobs and a list of services that you want to use during build time.

It is also possible to define different images and services per job:

Define image and services in config.toml

Look for the [runners.docker] section:

The example above uses the array of tables syntax.

The image and services defined this way will be added to all builds run by that runner, so even if you don’t define an image inside .gitlab-ci.yml, the one defined in config.toml will be used.

Define an image from a private Docker registry

Starting with GitLab Runner 0.6.0, you are able to define images located in private registries that could also require authentication.

All you have to do is be explicit on the image definition in .gitlab-ci.yml.

In the example above, GitLab Runner will look at my.registry.tld:5000 for the image namespace/image:tag.

If the repository is private you need to authenticate your GitLab Runner in the registry. Read more on using a private Docker registry.

Accessing the services

Let’s say that you need a WordPress instance to test some API integration with your application.

You can then use for example the tutum/wordpress as a service image in your .gitlab-ci.yml:

When the build is run, tutum/wordpress will be started first and you will have access to it from your build container under the hostname tutum__wordpress and tutum-wordpress.

The GitLab Runner creates two alias hostnames for the service that you can use alternatively. The aliases are taken from the image name following these rules:

- Everything after : is stripped.

- For the first alias, the slash (/) is replaced with double underscores (__).

- For the second alias, the slash (/) is replaced with a single dash (-).

Using a private service image will strip any port given and apply the rules as described above. A service registry.gitlab-wp.com:4999/tutum/wordpress will result in hostname registry.gitlab-wp.com__tutum__wordpress and registry.gitlab-wp.com-tutum-wordpress.
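The alias rules above can be reproduced with a few lines of string handling. This is a sketch that matches the two examples in the text, not the GitLab Runner implementation; the function name service_aliases is hypothetical.

```python
def service_aliases(image):
    """Derive the two service alias hostnames from an image name."""
    # Strip any ":<port>" or ":<tag>" suffix from each slash-separated segment.
    segments = [segment.split(":", 1)[0] for segment in image.split("/")]
    # First alias joins segments with "__", second alias joins them with "-".
    return "__".join(segments), "-".join(segments)


print(service_aliases("tutum/wordpress"))
print(service_aliases("registry.gitlab-wp.com:4999/tutum/wordpress"))
```

Stripping the suffix per segment is a design choice of this sketch so that both a registry port and an image tag are removed, as the private registry example requires.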

Configuring services

Many services accept environment variables which allow you to easily change database names or set account names depending on the environment.

GitLab Runner 0.5.0 and up passes all YAML-defined variables to the created service containers.

For all possible configuration variables check the documentation of each image provided in their corresponding Docker Hub page.

All variables are passed to all services containers. It’s not designed to distinguish which variable should go where. Secure variables are only passed to the build container.


Mounting a directory in RAM

You can mount a path in RAM using tmpfs. This can speed up the time required to test if there is a lot of I/O related work, such as with databases.

If you use the tmpfs and services_tmpfs options in the runner configuration, you can specify multiple paths, each with its own options. See the Docker reference for details.

This is an example config.toml to mount the data directory for the official MySQL container in RAM.

Build directory in service

Since version 1.5 GitLab Runner mounts a /builds directory to all shared services.

See an issue: https://gitlab.com/gitlab-org/gitlab-runner/-/issues/1520.

PostgreSQL service example

See the specific documentation for using PostgreSQL as a service.

MySQL service example

See the specific documentation for using MySQL as a service.

The services health check

After the service is started, GitLab Runner waits some time for the service to be responsive. Currently, the Docker executor tries to open a TCP connection to the first exposed service in the service container.

You can see how it is implemented by checking this Go command.
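The idea of such a check (retry a TCP connection until the service answers or a deadline passes) can be sketched in Python. This is only an illustration of the technique; the actual check is written in Go, and the function name wait_for_tcp, the default timeout, and the retry interval are assumptions.

```python
import socket
import time


def wait_for_tcp(host, port, timeout=30.0, interval=0.5):
    """Return True once a TCP connection to host:port succeeds, else False."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Connection refused or timed out: retry until the deadline.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


# Example call (hypothetical host and port):
# wait_for_tcp("mysql", 3306, timeout=30)
```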

The builds and cache storage

The Docker executor by default stores all builds in /builds/<namespace>/<project-name> and all caches in /cache (inside the container). You can overwrite the /builds and /cache directories by defining the builds_dir and cache_dir options under the [[runners]] section in config.toml. This will modify where the data are stored inside the container.

If you modify the /cache storage path, you also need to make sure to mark this directory as persistent by defining it in volumes = ['/my/cache/'] under the [runners.docker] section in config.toml.

Clearing Docker cache

Introduced in GitLab Runner 13.9: all created runner resources are cleaned up.

GitLab Runner provides the clear-docker-cache script to remove old containers and volumes that can unnecessarily consume disk space.

Run clear-docker-cache regularly (using cron once per week, for example), ensuring a balance is struck between:

- Maintaining some recent containers in the cache for performance.

- Reclaiming disk space.

clear-docker-cache can remove old or unused containers and volumes that are created by the GitLab Runner. For a list of options, run the script with the help option:

The default option is prune-volumes, with which the script removes all unused containers (both dangling and unreferenced) and volumes.

Clearing old build images

The clear-docker-cache script will not remove the Docker images as they are not tagged by the GitLab Runner. You can however confirm the space that can be reclaimed by running the script with the space option as illustrated below:

Once you have confirmed the reclaimable space, run the docker system prune command that will remove all unused containers, networks, images (both dangling and unreferenced), and optionally, volumes that are not tagged by the GitLab Runner.

The persistent storage

The Docker executor can provide a persistent storage when running the containers. All directories defined under volumes = will be persistent between builds.

The volumes directive supports two types of storage:

- <path> - the dynamic storage. The <path> is persistent between subsequent runs of the same concurrent job for that project. The data is attached to a custom cache volume: runner-<short-token>-project-<id>-concurrent-<concurrency-id>-cache-<md5-of-path>.

- <host-path>:<path>[:<mode>] - the host-bound storage. The <path> is bound to <host-path> on the host system. The optional <mode> can specify that this storage is read-only or read-write (default).
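The difference between the two forms can be shown by classifying a volumes entry. This is a minimal sketch that assumes POSIX-style paths (Windows drive letters contain a colon and would need extra handling) and assumes the Docker mode strings ro and rw; the function name parse_volume is hypothetical.

```python
def parse_volume(spec):
    """Classify a volumes entry as dynamic or host-bound storage."""
    parts = spec.split(":")
    if len(parts) == 1:
        # "<path>": dynamic storage backed by a runner-managed cache volume.
        return {"type": "dynamic", "path": parts[0]}
    if len(parts) in (2, 3):
        # "<host-path>:<path>[:<mode>]": host-bound storage.
        # Read-write is the default when no mode is given.
        mode = parts[2] if len(parts) == 3 else "rw"
        return {
            "type": "host-bound",
            "host_path": parts[0],
            "path": parts[1],
            "mode": mode,
        }
    raise ValueError(f"unrecognized volume spec: {spec!r}")


print(parse_volume("/cache"))
print(parse_volume("/srv/cache:/cache:ro"))
```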

The persistent storage for builds

If you make the /builds directory a host-bound storage, your builds will be stored in: /builds/<short-token>/<concurrent-id>/<namespace>/<project-name>, where:

- <short-token> is a shortened version of the Runner’s token (first 8 letters).

- <concurrent-id> is a unique number, identifying the local job ID on the particular runner in the context of the project.
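The path layout described here can be sketched in a few lines of Python. The function name and the sample token, namespace, and project values are hypothetical; only the layout and the "first 8 letters" rule come from the text.

```python
import posixpath


def host_builds_dir(runner_token: str, concurrent_id: int,
                    namespace: str, project_name: str) -> str:
    """Compose the documented builds path for host-bound /builds storage."""
    short_token = runner_token[:8]  # shortened token: first 8 characters
    return posixpath.join("/builds", short_token, str(concurrent_id),
                          namespace, project_name)


# Sample values are made up for illustration.
print(host_builds_dir("a1b2c3d4e5f6", 0, "my-group", "my-project"))
# prints /builds/a1b2c3d4/0/my-group/my-project
```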

The privileged mode

The Docker executor supports a number of options that allow fine-tuning of the build container. One of these options is the privileged mode.

Use Docker-in-Docker with privileged mode

The configured privileged flag is passed to the build container and all services, which makes it easy to use the Docker-in-Docker approach.

First, configure your runner (config.toml) to run in privileged mode by setting privileged = true in the [runners.docker] section.

Then, make your build script (.gitlab-ci.yml) use a Docker-in-Docker container, for example by adding docker:dind as a service.

The ENTRYPOINT

The Docker executor doesn’t overwrite the ENTRYPOINT of a Docker image.

That means that if your image defines the ENTRYPOINT and doesn’t allow running scripts with CMD, the image will not work with the Docker executor.

With the use of ENTRYPOINT it is possible to create a special Docker image that runs the build script in a custom environment, or in secure mode.

For example, you can create a Docker image whose ENTRYPOINT doesn’t execute the build script, but instead executes a predefined set of commands, such as building a Docker image from your directory. In that case, you can run the build container in privileged mode and still keep the build environment of the runner secure.

Consider the following example:

1. Create a new Dockerfile.

2. Create a bash script (entrypoint.sh) that will be used as the ENTRYPOINT.

3. Push the image to the Docker registry.

4. Run the Docker executor in privileged mode by setting privileged = true under [runners.docker] in config.toml.

5. In your project, use the image in .gitlab-ci.yml.

This is just one example. With this approach the possibilities are limitless.

How pull policies work

When using the docker or docker+machine executors, you can set the pull_policy parameter in the runner config.toml file as described in the configuration docs’ Docker section.

This parameter defines how the runner works when pulling Docker images (for both image and services keywords). You can set it to a single value, or a list of pull policies, which will be attempted in order until an image is pulled successfully.

If you don’t set any value for the pull_policy parameter, then the runner will use the always pull policy as the default value.

Now let’s see how these policies work.

Using the never pull policy

The never pull policy disables image pulling completely. If you set the pull_policy parameter of a runner to never, then users will be able to use only the images that have been manually pulled on the Docker host the runner runs on.

If an image cannot be found locally, the runner fails the build with an error reporting that the image was not found.

When to use this pull policy?

This pull policy should be used if you want or need to have full control over which images are used by the runner’s users. It is a good choice for private runners that are dedicated to a project where only specific images can be used (not publicly available on any registries).

When not to use this pull policy?

This pull policy will not work properly with most auto-scaled Docker executor use cases. Because of how auto-scaling works, the never pull policy may be usable only with a pre-defined cloud instance image for the chosen cloud provider. That image needs to contain an installed Docker Engine and a local copy of the images used.

Using the if-not-present pull policy

When the if-not-present pull policy is used, the runner will first check if the image is present locally. If it is, then the local version of the image will be used. Otherwise, the runner will try to pull the image.

When to use this pull policy?

This pull policy is a good choice if you want to use images pulled from remote registries, but you want to reduce the time spent on analyzing image layer differences when using heavy and rarely updated images. In that case, you will occasionally need to manually remove the image from the local Docker Engine store to force an update of the image.

It is also a good choice if you need to use images that are built and available only locally, but also need to allow pulling images from remote registries.

When not to use this pull policy?

This pull policy should not be used if your builds use images that are updated frequently and need to be used in their most recent versions. In such a situation, the network load reduction created by this policy may be outweighed by the need to delete local copies of images very frequently.

This pull policy should also not be used if your runner can be used by different users who should not have access to private images used by each other. In particular, do not use this pull policy for shared runners.

To understand why the if-not-present pull policy creates security issues when used with private images, read the security considerations documentation.

Using the always pull policy

The always pull policy ensures that the image is always pulled. When always is used, the runner will try to pull the image even if a local copy is available. The caching semantics of the underlying image provider make this policy efficient: the pull attempt is fast because all image layers are cached.

If the image is not found, then the build will fail with an error reporting that the image was not found.

When using the always pull policy in GitLab Runner versions older than v1.8, it could fall back to the local copy of an image and print a warning.

This was changed in GitLab Runner v1.8.

When to use this pull policy?

This pull policy should be used if your runner is publicly available and configured as a shared runner in your GitLab instance. It is the only pull policy that can be considered secure when the runner will be used with private images.

This is also a good choice if you want to force users to always use the newest images.

Also, this will be the best solution for an auto-scaled configuration of the runner.

When not to use this pull policy?

This pull policy will definitely not work if you need to use locally stored images. In this case, the runner will skip the local copy of the image and try to pull it from the remote registry. If the image was built locally and doesn’t exist in any public registry (and especially in the default Docker registry), the build will fail because the image cannot be pulled.

Using multiple pull policies

Introduced in GitLab Runner 13.8.

The pull_policy parameter allows you to specify a list of pull policies. The policies in the list will be attempted in order from left to right until a pull attempt is successful, or the list is exhausted.

When to use multiple pull policies?

This functionality can be useful when the Docker registry is not available and you need to increase job resiliency. If you use the always policy and the registry is not available, the job fails even if the desired image is cached locally.

To overcome that behavior, you can add fallback pull policies that execute in case of failure. By adding a second pull policy value of if-not-present (pull_policy = ["always", "if-not-present"]), the runner finds any locally-cached Docker image layers.

Any failure to fetch the Docker image causes the runner to attempt the following pull policy. Examples include an HTTP 403 Forbidden or an HTTP 500 Internal Server Error response from the repository.

Note that the security implications mentioned in the When not to use this pull policy? sub-section of the Using the if-not-present pull policy section still apply, so you should read the security considerations documentation.
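The fallback behavior described in this section can be modeled with a small Python simulation. This is a sketch of the documented semantics only, not the runner's code: PullError, normalize_policies, resolve_image, the pull callable, and the sample image name are all hypothetical names introduced for illustration.

```python
from typing import Callable, Iterable, List, Set, Union


class PullError(Exception):
    """Raised when an image cannot be obtained under a policy (hypothetical)."""


def normalize_policies(value: Union[None, str, Iterable[str]]) -> List[str]:
    """Accept an unset value, a single policy, or a list of policies."""
    if value is None:
        return ["always"]  # documented default when pull_policy is not set
    if isinstance(value, str):
        return [value]
    return list(value)


def resolve_image(image: str, pull_policy, local_images: Set[str],
                  pull: Callable[[str], None]) -> str:
    """Try each policy in order; return the policy that succeeded."""
    errors = []
    for policy in normalize_policies(pull_policy):
        try:
            if policy == "never":
                if image not in local_images:
                    raise PullError(f"{image} not found locally")
            elif policy == "if-not-present":
                if image not in local_images:
                    pull(image)
                    local_images.add(image)
            elif policy == "always":
                pull(image)  # pull even when a local copy exists
                local_images.add(image)
            else:
                raise ValueError(f"unknown pull policy: {policy}")
            return policy
        except PullError as exc:
            errors.append(f"{policy}: {exc}")  # fall through to next policy
    raise PullError("; ".join(errors))


def registry_down(image: str) -> None:
    """Stand-in for a pull against an unavailable registry."""
    raise PullError("registry unavailable")


cached = {"ruby:3.2"}  # sample image name, assumed to be cached locally
print(resolve_image("ruby:3.2", ["always", "if-not-present"],
                    cached, registry_down))
# prints if-not-present
```

With always alone, the same call raises PullError even though the image is cached, which is the failure mode the fallback list is meant to avoid.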

Docker vs Docker-SSH (and Docker+Machine vs Docker-SSH+Machine)

We provided support for a special type of Docker executor, namely Docker-SSH (and the autoscaled version: Docker-SSH+Machine). Docker-SSH uses the same logic as the Docker executor, but instead of executing the script directly, it uses an SSH client to connect to the build container.

Docker-SSH then connects to the SSH server that is running inside the container using its internal IP.

This executor is no longer maintained and will be removed in the near future.
